A quantitative analysis of Shobu

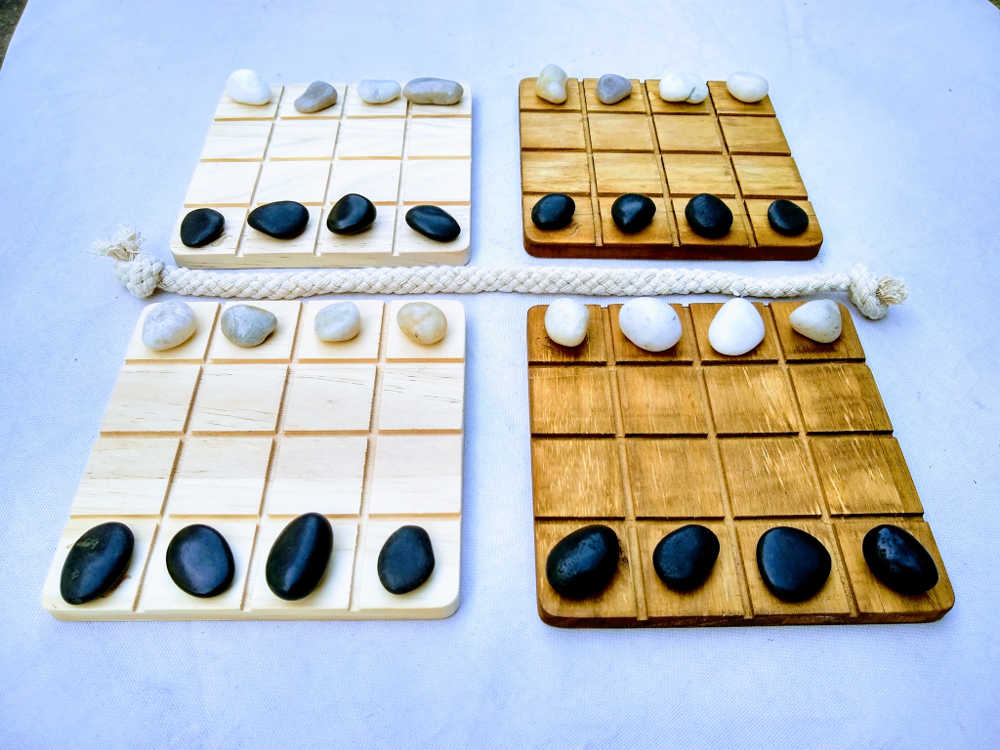

Last year at PAX Unplugged I was hoping to find a relatively simple board game that would be amenable to simulation and quantitative analysis. I found one: SHŌBU (which I will write as Shobu from here on), published by Smirk & Laughter Games.

Shobu is a delightfully simple two-player board game in the vein of Checkers, Chess, Go and the like. You can learn to play in about five minutes, but you could spend years mastering it. This article isn’t a review of the game (suffice it to say I enjoy playing it) but a look at how I simulated the game to generate a dataset of played games for further analysis.

Inspiration

I began this project by taking a look at the Halite 3 game engine. The Halite engine was used by Two Sigma for their yearly AI competition, and it had properties similar to what I needed. Specifically, it sported a game engine for handling and enforcing turn-to-turn rules, as well as for communicating with AI agents that could be written in any language. The Two Sigma team did this by writing an engine whose only responsibility is to take the desired actions of a “player” (or AI in this case) as input, process the turn by enforcing the rules and advancing the game state, then output the new game state to the next “player” in the turn sequence. This process repeats for each AI agent until someone wins the game or the turn limit is reached.

This was the same basic architecture that I used to develop my Shobu engine. One process is responsible for advancing the game state based on actions chosen by the AI players, and the AI players run as separate processes communicating with the game engine via STDIN and STDOUT. There is more technical information on the engine available in the source code README which you can find a link to at the end of the article.
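The turn loop described above can be sketched in a few lines of Python. This is only an illustration of the engine-plus-agent-processes pattern, not the actual Shobu engine: the one-line-per-turn message format, the `AGENT_SOURCE` stand-in agent, and the `spawn_agent`, `ask`, and `run_match` names are all assumptions made for the example, and rule enforcement is reduced to a placeholder.

```python
import subprocess
import sys

# Hypothetical stand-in agent: reads one state line, replies with one move line.
AGENT_SOURCE = (
    "import sys\n"
    "for line in sys.stdin:\n"
    "    print('move-for:' + line.strip(), flush=True)\n"
)

def spawn_agent():
    """Start an agent as a separate process with piped STDIN/STDOUT."""
    return subprocess.Popen(
        [sys.executable, "-c", AGENT_SOURCE],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
        bufsize=1,  # line-buffered pipes
    )

def ask(agent, state):
    """Send the current state to an agent and read back its chosen move."""
    agent.stdin.write(state + "\n")
    agent.stdin.flush()
    return agent.stdout.readline().strip()

def run_match(turn_limit=4):
    """Alternate between two agent processes until the turn limit is hit."""
    agents = [spawn_agent(), spawn_agent()]
    log = []
    state = "turn0"
    try:
        for turn in range(turn_limit):
            player = turn % 2
            move = ask(agents[player], state)
            log.append((player, move))
            # Placeholder: a real engine would validate the move against
            # the rules and compute the next board state here.
            state = f"turn{turn + 1}"
    finally:
        for agent in agents:
            agent.stdin.close()   # EOF ends the agent's read loop
            agent.wait()
            agent.stdout.close()
    return log

if __name__ == "__main__":
    for player, move in run_match():
        print(player, move)
```

Because the agents only ever see text on STDIN and write text to STDOUT, they can be written in any language, which is the property that made the Halite design attractive.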

Generating the dataset

To do any analysis on games of Shobu, I needed to collect recordings of lots of games. To avoid any bias in strategy, I wrote a very basic Shobu AI which picks a random move from the list of all legal moves on each turn. Additionally, I chose to limit my collection to games which ended in 50 turns or fewer, since “real” games are rarely this long and the cutoff would let me eliminate a lot of noise in the data.
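As a rough sketch of that collection strategy, the snippet below pairs a uniformly random agent with a turn-limit filter. Shobu's move generation is not reproduced here; a toy take-away game (`toy_legal_moves`, `play_random_game`) stands in for it, and all function names are hypothetical. Only the random choice among legal moves and the 50-turn cutoff come from the description above.

```python
import random

TURN_LIMIT = 50  # the cutoff described above

def random_agent(legal_moves, rng):
    """Pick uniformly from the legal moves, with no strategy at all."""
    return rng.choice(legal_moves)

# Toy stand-in for the real rules: players alternately take 1-3 stones
# from a pile, and the game ends when the pile is empty.
def toy_legal_moves(pile):
    return list(range(1, min(3, pile) + 1))

def play_random_game(rng, pile=60):
    """Play one game with both sides moving randomly."""
    turns = 0
    while pile > 0:
        pile -= random_agent(toy_legal_moves(pile), rng)
        turns += 1
    winner = (turns - 1) % 2  # player 0 moves first; last mover wins
    return {"turns": turns, "winner": winner}

def collect_games(n_simulated, seed=0):
    """Simulate games and keep only those within the turn limit."""
    rng = random.Random(seed)
    games = (play_random_game(rng) for _ in range(n_simulated))
    return [g for g in games if g["turns"] <= TURN_LIMIT]

if __name__ == "__main__":
    kept = collect_games(1000)
    print(f"kept {len(kept)} of 1000 simulated games")
```

Filtering after the fact like this is simple but wasteful when most games run long, since every discarded game still has to be played out.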

Having chosen these criteria, I set up a small cluster of computers to simulate randomly played games and keep those that ended within 50 turns. Running on 20 CPU cores (of varying performance), the simulation took about 6 weeks to generate the 104,396 games that I ended up keeping. With each player making random moves, only about 1.5% of games finished within the limit, so I threw out a great many games along the way.
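To put "a whole lot" into numbers, here is a back-of-the-envelope estimate built only from the figures quoted above. It assumes the 1.5% keep rate is exact and that all 20 cores were busy for the full six weeks, neither of which is strictly true, so treat the results as rough orders of magnitude.

```python
kept_games = 104_396   # games retained in the dataset
keep_rate = 0.015      # about 1.5% of games finished within the limit
cores = 20
weeks = 6

# Total games that had to be simulated to end up with the kept ones.
simulated = kept_games / keep_rate
discarded = simulated - kept_games

# Average compute cost per simulated game, assuming full utilization.
wall_seconds = weeks * 7 * 24 * 3600
core_seconds_per_game = cores * wall_seconds / simulated

print(f"simulated: about {simulated / 1e6:.1f} million games")
print(f"discarded: about {discarded / 1e6:.1f} million games")
print(f"cost: about {core_seconds_per_game:.0f} core-seconds per game")
```

Under these assumptions, roughly seven million games were played to keep about a hundred thousand, at something like ten core-seconds per simulated game.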

Stalemates

I didn’t analyze the game terribly deeply, but I did find some interesting points. Perhaps the first obvious question to answer is: are stalemates possible? Yes, it is possible to reach a stalemate, but it doesn’t happen very often. Here’s an example of one such “inside-outside” stalemate board configuration:

    ...x|...o
    ....|...o
    o...|....
    .o..|x...
    ---------
    ...x|...o
    ...x|....
    ....|.x..
    o...|....

It may be possible to reach a stalemate in other ways, but this is the only type that I observed. All of one player’s stones are in an “inside” column and the other player’s stones form two “outside” columns. This isn’t the kind of stalemate where each player can trivially counter the other’s moves, either; it’s simply no longer possible for either player to knock another player’s stone off any board.
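For anyone who wants to work with positions in this text notation, the sketch below splits a diagram into its four 4x4 boards and counts stones on each. The `parse_boards` and `stone_counts` helpers and the board names are made up for this example, and the notation is the one used in this article, not necessarily the dataset's storage format. Since a player wins by clearing the opponent's stones from any one board, a position where every board still holds both colours has no winner yet.

```python
STALEMATE = """\
...x|...o
....|...o
o...|....
.o..|x...
---------
...x|...o
...x|....
....|.x..
o...|....
"""

def parse_boards(diagram):
    """Split a text diagram into four boards, each a list of 4 row strings."""
    rows = [line for line in diagram.splitlines()
            if line and not line.startswith("-")]
    boards = {"top_left": [], "top_right": [],
              "bottom_left": [], "bottom_right": []}
    for i, row in enumerate(rows):
        left, right = row.split("|")
        half = "top" if i < 4 else "bottom"
        boards[f"{half}_left"].append(left)
        boards[f"{half}_right"].append(right)
    return boards

def stone_counts(boards):
    """Count 'x' and 'o' stones on each board."""
    return {
        name: {s: sum(r.count(s) for r in rows) for s in "xo"}
        for name, rows in boards.items()
    }

def has_winner(counts):
    """True if some board has lost all stones of one colour."""
    return any(c["x"] == 0 or c["o"] == 0 for c in counts.values())

if __name__ == "__main__":
    counts = stone_counts(parse_boards(STALEMATE))
    print(counts)
    print("winner decided:", has_winner(counts))
```

Detecting that a position like this is a true stalemate would additionally require full move generation, which this sketch does not attempt.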

Black advantage

The dataset showed that when both players move randomly, and are therefore evenly matched, the black stone player (who moves first at the start of the game) has a slight advantage. Black won 53% of the games and white won 47%.
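A quick check of whether a 53/47 split could plausibly be noise, using a normal approximation to the binomial. This is a sketch under assumptions: it treats the rounded 53% as exact and assumes every one of the 104,396 kept games had a winner. It also says nothing about skilled play, since the sample is random games that ended within the turn limit.

```python
import math

def black_advantage(n_games, black_rate):
    """Return the z-score against a fair 50/50 split and a 95% interval."""
    se_null = math.sqrt(0.25 / n_games)           # std. error if p = 0.5
    z = (black_rate - 0.5) / se_null
    se = math.sqrt(black_rate * (1 - black_rate) / n_games)
    interval = (black_rate - 1.96 * se, black_rate + 1.96 * se)
    return z, interval

if __name__ == "__main__":
    z, (low, high) = black_advantage(104_396, 0.53)
    print(f"z = {z:.1f}, 95% interval = ({low:.3f}, {high:.3f})")
```

With a sample this large the interval is only a fraction of a percentage point wide, so the first-move edge in random play is very unlikely to be a sampling artifact.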

Home board advantage

It can also be seen in this dataset that the “winning board” (that is, the board on which the winning move is made) is not equally likely to be any of the four boards. Winning turns were significantly more likely to be made on one of a player’s home boards than otherwise.

Winning board in games the black player won:

    White home board
    11% | 14%
    ----|----
    43% | 31%
    Black home board

Winning board in games the white player won:

    White home board
    36% | 36%
    ----|----
    14% | 14%
    Black home board

Given that the players made entirely random moves, one might extrapolate that it is significantly easier to win by playing aggressively on your home boards rather than your opponent’s. Perhaps there is a way to counter this strategy, but finding it will be left as an exercise for the reader.
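Adding up the quadrant percentages makes the home-board effect easier to see. The snippet below simply sums the published (rounded) figures from the two tables, so the black table totals 99 rather than 100; the dictionary layout and the `home_share` helper are just one convenient way to hold the numbers.

```python
# Percent of wins by winning board, (left, right) per row of the tables.
black_wins = {"white_home": (11, 14), "black_home": (43, 31)}
white_wins = {"white_home": (36, 36), "black_home": (14, 14)}

def home_share(table, winner_home):
    """Fraction of wins that landed on the winner's own home boards."""
    home = sum(table[winner_home])
    total = sum(sum(row) for row in table.values())
    return home / total

if __name__ == "__main__":
    print(f"black wins on black home boards: "
          f"{home_share(black_wins, 'black_home'):.0%}")
    print(f"white wins on white home boards: "
          f"{home_share(white_wins, 'white_home'):.0%}")
```

For both colours, roughly three out of four wins were completed on one of the winner's own home boards.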

You may also notice that the lower left quadrant seems more likely to win for the black player. This is likely not the case; instead, I expect it is a side effect of that quadrant being the location of the first move in every game in my analysis. The dataset is indeed composed of truly random games, but for the purpose of analysing opening moves I flipped games across the vertical axis so that all opening moves happened in the lower left quadrant. This is so that symmetrical (equivalent) opening moves by the black player would not be double counted. On the flip side (pun intended), this could mean that a win is most likely to occur in the quadrant where the first move is made.
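Flipping across the vertical axis is easy to express on the text notation used in this article: because the `|` divider sits in the middle of each row, reversing every row mirrors the whole position. The sketch below shows that, plus one way to deduplicate mirror-image positions. Note the swap: instead of the lower-left rule described above, `canonical` uses a simpler lexicographic tie-break (keep whichever of the two mirror images sorts first), which achieves the same deduplication but may pick the other orientation. The function names and the example position are illustrative, not taken from the author's code.

```python
# An example position in this article's text notation.
POSITION = """\
oooo|oooo
....|..x.
....|....
xxxx|.xxx
---------
oooo|oooo
..x.|....
....|....
.xxx|xxxx"""

def mirror(diagram):
    """Flip a position across the vertical axis by reversing each row."""
    return "\n".join(line[::-1] for line in diagram.splitlines())

def canonical(diagram):
    """Pick one representative of a position and its mirror image."""
    return min(diagram, mirror(diagram))

if __name__ == "__main__":
    print(mirror(POSITION))
    # Both orientations collapse to the same key, so equivalent
    # openings are counted once.
    print(canonical(POSITION) == canonical(mirror(POSITION)))
```

Whole games would need every recorded move mirrored the same way, not just the first position, so that the rest of the game stays consistent with the flipped opening.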

Opening moves

There are 116 possible opening moves in a game of Shobu. Of these, one opening move (which can be played four ways when you account for symmetry) led to ~7% more wins (596 versus 556 wins) than the next most successful opening moves. That doesn’t sound like much, but when you consider that the gap between the most successful opening move and the least successful is ~37% (596 versus 408 wins), you can see that it is a noteworthy difference. The opening move is this:

    oooo|oooo
    ....|..x.
    ....|....
    xxxx|.xxx
    ---------
    oooo|oooo
    ..x.|....
    ....|....
    .xxx|xxxx

I cannot say that this would be a particularly effective opening move against any skilled human player, but it is an interesting data point to think about.
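The percentages quoted for the opening-move win counts depend on which baseline the difference is divided by, so it is worth spelling out the arithmetic. The two helpers below are hypothetical names for two common conventions. The ~7% figure comes out about the same either way, while the ~37% figure matches the difference taken relative to the midpoint of the two counts; relative to the lower count, the same gap is about 46%.

```python
def pct_more(a, b):
    """Percent by which a exceeds b, relative to b."""
    return 100 * (a - b) / b

def pct_symmetric(a, b):
    """Percent difference relative to the midpoint of a and b."""
    return 100 * (a - b) / ((a + b) / 2)

if __name__ == "__main__":
    best, runner_up, worst = 596, 556, 408
    print(f"best vs runner-up: {pct_more(best, runner_up):.1f}% more, "
          f"{pct_symmetric(best, runner_up):.1f}% symmetric")
    print(f"best vs worst:     {pct_more(best, worst):.1f}% more, "
          f"{pct_symmetric(best, worst):.1f}% symmetric")
```

Either way, the spread between the best and worst openings is several times larger than the gap between the top two, which is the comparison the argument rests on.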

Links to source

If you’re interested in doing some analysis on this data yourself, or in developing an AI agent to explore Shobu strategy, you can find the dataset and engine source code below:

Shobu 104,396 randomly played games dataset: https://www.kaggle.com/bsfoltz/shobu-randomly-played-games-104k

Shobu AI Playground (game engine): https://github.com/JayWalker512/Shobu

Finally if you’re interested in playing Shobu yourself, you can buy it here: https://www.smirkandlaughter.com/shobu

Super cool project dude! I am generating a similar dataset for a project of my own in Java. I am not restricting my dataset to the games that take less than 50 turns. Thank you for showing me that there are stalemates so I can change my code to account for those. Really liked your findings and have similar results for win rate between black and white. Would love to ask you some questions about it if you have some time.

Thanks,

Spencer